AI Meets Emotions: Can Technology Really Understand Human Feelings?

Discover how AI is evolving to understand human emotions. Explore emotion AI, its real-world applications, limitations, and ethical implications in this in-depth guide.

TECH TALES

Deepita

4/16/2025 · 6 min read

In an increasingly interconnected world, emotional intelligence (EQ) has emerged as a vital component of effective communication, leadership, and interpersonal relationships. Defined as the ability to recognize, understand, and manage both our own emotions and those of others, EQ is a cornerstone of human interaction that fosters empathy, conflict resolution, and collaboration.

As artificial intelligence (AI) becomes more integrated into our daily lives—from virtual assistants and customer support bots to healthcare and education platforms—the question arises: can machines learn to understand and respond to human emotions? The development of emotional AI, or affective computing, seeks to bridge this gap by enabling technology to detect, interpret, and even simulate emotional states.

Understanding human emotions isn't just a fascinating scientific endeavour; it's a practical necessity. For AI to seamlessly interact with people in meaningful ways, it must be equipped with the ability to read subtle emotional cues and respond appropriately. Whether it’s a virtual therapist gauging a patient's mood or a car detecting driver fatigue, emotional awareness in AI could revolutionize the way we engage with technology. But how close are we to achieving this goal, and what challenges lie ahead?

Understanding Human Emotions

Emotional intelligence, often abbreviated as EQ, refers to the capacity to identify, understand, manage, and influence emotions—both one’s own and those of others. Unlike IQ, which measures cognitive abilities, EQ emphasizes self-awareness, empathy, emotional regulation, and social skills. These traits are essential in everyday human interaction and play a significant role in decision-making, conflict resolution, and relationship building.

Humans express emotions in a multitude of ways, ranging from facial expressions and body language to tone of voice and choice of words. These expressions are often unconscious and instinctual, yet they carry rich information about a person’s internal state. People are naturally adept at picking up on these emotional cues and adjusting their behaviour accordingly.

However, the interpretation of emotions isn’t universally standard. Cultural norms, societal influences, and individual experiences shape how emotions are expressed and perceived. For instance, a smile might indicate happiness in one culture but could also be used to mask discomfort in another. These subtleties add layers of complexity to the emotional landscape—challenges that AI systems must navigate to truly grasp human feelings.

The Evolution of Emotion AI

The quest to enable machines to understand emotions began in the 1990s with the emergence of affective computing—a term coined by MIT researcher Rosalind Picard. This interdisciplinary field blends computer science, psychology, and cognitive science to design systems capable of detecting and responding to human emotions.

Since then, emotion AI has made significant strides. From rudimentary emotion-detection algorithms that could recognize simple facial expressions, the field has evolved to include sophisticated models that assess voice inflections, monitor physiological signals, and analyze language for sentiment and emotional undertones.
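Modern systems analyze language with trained models. The basic idea of scoring text for emotional undertones can still be shown with a much simpler lexicon-based approach. The sketch below is a minimal illustration that assumes a tiny, hypothetical word list (`EMOTION_LEXICON`). It does not describe any particular product.

```python
import re
from collections import Counter

# Hypothetical toy lexicon: word -> emotion label. Real lexicons hold
# thousands of entries, and modern systems use trained language models instead.
EMOTION_LEXICON = {
    "happy": "joy", "great": "joy", "love": "joy",
    "sad": "sadness", "miss": "sadness", "lonely": "sadness",
    "angry": "anger", "hate": "anger", "furious": "anger",
    "scared": "fear", "worried": "fear",
}

def score_emotions(text: str) -> Counter:
    """Count lexicon matches per emotion label in the lowercased text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(EMOTION_LEXICON[t] for t in tokens if t in EMOTION_LEXICON)

def dominant_emotion(text: str) -> str:
    """Return the most frequent emotion label, or 'neutral' if nothing matches."""
    counts = score_emotions(text)
    if not counts:
        return "neutral"
    return counts.most_common(1)[0][0]

print(dominant_emotion("I love this, I am so happy"))              # joy
print(dominant_emotion("Oh great, my flight is cancelled again"))  # joy (sarcasm missed)
```

The second example exposes the method's main weakness. The sarcastic "Oh great" is scored as joy because word counts ignore context. This is one reason sarcasm and nuance remain hard for emotion AI.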

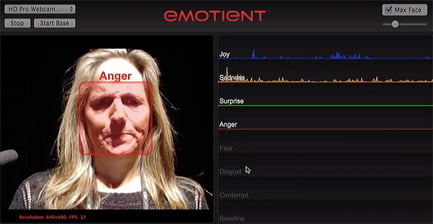

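Today, various tools and platforms offer emotion AI capabilities. Affectiva’s automotive emotion-detection system and Realeyes’ engagement analytics for marketers are examples of commercial products that give businesses emotional insights. Microsoft also offered an Emotion API, which was later folded into its Azure Face service. In 2022, Microsoft announced it would retire that service’s emotion-recognition features, citing a lack of scientific consensus on the definition of emotions and heightened privacy concerns. These developments suggest that machines are gradually becoming more adept at reading how humans feel, though still with notable limitations.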

How AI Detects Emotions

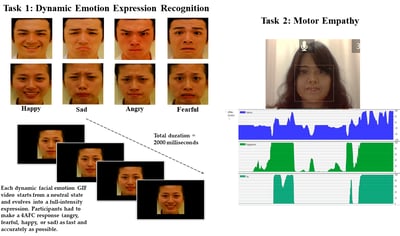

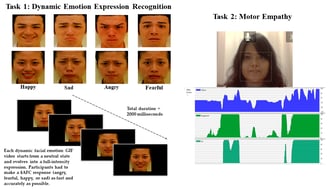

Emotion AI relies on a variety of technologies to interpret human emotions. One of the most widely used methods is facial expression analysis. It examines facial features and micro-expressions, which are fleeting, involuntary facial movements that some researchers believe can reveal concealed emotions. By tracking key facial landmarks, such as the corners of the mouth, the eyebrows, and the eyelids, AI systems estimate whether a person’s expression most resembles happiness, sadness, anger, surprise, or fear.
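Production systems use deep networks trained on large labelled datasets. The underlying pipeline (landmarks in, geometric features out, then a classifier) can still be sketched in a few lines. Everything in this sketch is hypothetical: the landmark names, the two hand-picked features, and the centroid values are invented for illustration. The landmark coordinates are assumed to come from some upstream face-landmark detector.

```python
import math

Point = tuple[float, float]

# Hypothetical class centroids in (mouth_curvature, mouth_openness) space.
# A real system would learn far richer features from labelled data.
CENTROIDS = {
    "happy": (0.15, 0.10),
    "sad": (-0.15, 0.05),
    "surprised": (0.00, 0.60),
    "neutral": (0.00, 0.05),
}

def extract_features(lm: dict[str, Point]) -> tuple[float, float]:
    """Convert 2-D landmarks (image coordinates, y grows downward) into two
    features, normalised by inter-ocular distance so face size cancels out."""
    eye_dist = math.dist(lm["eye_left"], lm["eye_right"])
    corner_y = (lm["mouth_left"][1] + lm["mouth_right"][1]) / 2
    lip_mid_y = (lm["upper_lip"][1] + lm["lower_lip"][1]) / 2
    # Mouth corners raised above the lip midpoint (smaller y) -> positive curvature.
    curvature = (lip_mid_y - corner_y) / eye_dist
    openness = math.dist(lm["upper_lip"], lm["lower_lip"]) / eye_dist
    return curvature, openness

def classify_expression(lm: dict[str, Point]) -> str:
    """Nearest-centroid classification in the 2-D feature space."""
    feats = extract_features(lm)
    return min(CENTROIDS, key=lambda label: math.dist(feats, CENTROIDS[label]))

smile = {
    "eye_left": (30, 40), "eye_right": (70, 40),
    "mouth_left": (35, 72), "mouth_right": (65, 72),
    "upper_lip": (50, 76), "lower_lip": (50, 80),
}
print(classify_expression(smile))  # happy
```

The output is a label for the expression's geometry, not a verified inner feeling. As the cultural examples earlier show, a smile can mean different things in different contexts.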

Another method involves analyzing voice tone and speech patterns. Changes in pitch, pace, volume, and rhythm often indicate emotional states. AI can process audio data to detect signs of stress, excitement, calmness, or frustration, even when the spoken words are neutral.
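To make "pitch" and "volume" concrete, this sketch computes two classic prosodic features from a single audio frame. The first is RMS energy. The second is a fundamental-frequency estimate based on autocorrelation. It assumes mono samples scaled to the range -1 to 1 and a voiced frame. The 75 to 400 Hz search range is an assumed typical speaking range. Turning these features into emotion labels would require a separately trained model, which is not shown.

```python
import math

def rms_energy(samples: list[float]) -> float:
    """Root-mean-square amplitude of a frame: a rough proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def estimate_pitch(samples: list[float], sample_rate: int,
                   fmin: float = 75.0, fmax: float = 400.0) -> float:
    """Estimate the fundamental frequency (Hz) of a voiced frame by finding
    the lag with the largest autocorrelation within [fmin, fmax].
    Naive O(N * lags) loop, fine for a short illustrative frame."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)

    def autocorr(lag: int) -> float:
        return sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))

    best_lag = max(range(min_lag, max_lag + 1), key=autocorr)
    return sample_rate / best_lag

# Synthetic 0.25 s "voice" frame: a pure 200 Hz tone sampled at 8 kHz.
sr = 8000
frame = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(2000)]
print(round(estimate_pitch(frame, sr)))  # 200
print(round(rms_energy(frame), 3))       # 0.354
```

Real systems compute features like these frame by frame. The changes over time, such as rising pitch or increasing loudness, are the cues a classifier learns to associate with stress or excitement.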

Additionally, biometric sensors can capture physiological signals such as heart rate, skin conductance, and pupil dilation. These signals reflect arousal and stress responses that may not show on the face or in the voice. Wearable devices and smart environments are increasingly leveraging this data to tailor experiences, optimize user engagement, and enhance safety, especially in sectors like healthcare and automotive.
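As an illustration of how raw physiological signals become features, this sketch computes two simple measures. The first is RMSSD, a standard heart-rate-variability statistic over successive beat-to-beat (RR) intervals. The second is a z-score test that flags skin-conductance readings well above a person's own resting baseline. The 2.0 threshold and the sample values are assumptions. Measures like these indicate physiological arousal or stress, not a specific emotion.

```python
import math
from statistics import mean, stdev

def rmssd(rr_intervals_ms: list[float]) -> float:
    """Root mean square of successive differences between heartbeats (ms),
    a common short-term heart-rate-variability measure."""
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

def arousal_flags(baseline: list[float], readings: list[float],
                  threshold: float = 2.0) -> list[bool]:
    """Flag readings more than `threshold` standard deviations above the
    person's own resting baseline (a simple z-score test)."""
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline must vary to compute a z-score")
    return [(r - mu) / sigma > threshold for r in readings]

print(round(rmssd([800, 810, 790, 800]), 2))  # 14.14
# Skin conductance in microsiemens (made-up values).
print(arousal_flags([2.0, 2.1, 1.9, 2.0, 2.0], [2.05, 2.5]))  # [False, True]
```

Comparing each reading against a personal baseline, rather than a fixed population threshold, accounts for large differences in resting physiology between people.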

The Ethical Dilemma of Emotion AI

As emotion AI continues to evolve, so do the ethical concerns surrounding its use. One of the most pressing issues is privacy. Emotion detection relies on sensitive data (facial expressions, voice recordings, biometric signals) that can be collected without explicit consent. This opens the door to emotional surveillance, where people’s feelings are monitored and analyzed without their knowledge, raising serious questions about autonomy and data rights.

There’s also the risk of emotional manipulation, particularly in marketing and political messaging. If companies can gauge a consumer’s mood in real time, they might tailor ads that exploit emotional vulnerabilities. Such targeting may well be effective, but it blurs the line between personalized engagement and unethical persuasion.

Furthermore, emotion AI is susceptible to bias. Algorithms trained on limited or non-diverse datasets may misinterpret emotions, especially across different cultures, genders, and age groups. This can lead to skewed results and unfair treatment—especially in high-stakes areas like hiring, law enforcement, or mental health diagnostics. Ensuring fairness and transparency in emotion AI models is crucial to building trust and preventing harm.
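One basic safeguard is to audit a model's performance separately for each demographic group instead of reporting a single overall figure. This sketch assumes a labelled evaluation set in which each example is tagged with a group attribute. The group names and labels are hypothetical. Real fairness audits also examine per-class error rates and calibration, not just the accuracy gap shown here.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples.
    Returns a dict mapping each group to the model's accuracy on it."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {g: correct[g] / total[g] for g in total}

def largest_gap(per_group: dict) -> float:
    """Difference in accuracy between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())

# Hypothetical evaluation results for two groups, "A" and "B".
results = [
    ("A", "happy", "happy"), ("A", "sad", "sad"),
    ("A", "angry", "angry"), ("A", "fear", "surprise"),
    ("B", "happy", "happy"), ("B", "sad", "neutral"),
    ("B", "angry", "sad"), ("B", "fear", "fear"),
]
per_group = accuracy_by_group(results)
print(per_group)               # {'A': 0.75, 'B': 0.5}
print(largest_gap(per_group))  # 0.25
```

A model reporting 62.5% overall accuracy here would hide the fact that it is right three times out of four for group A but only half the time for group B.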

The Role of Big Tech in Emotion AI

Major technology companies are playing a pivotal role in shaping the future of emotion AI. Google, Microsoft, Amazon, Apple, and Meta (formerly Facebook) have all invested in emotionally aware systems. Examples include Google’s work on empathetic chatbots, Microsoft’s now-retired emotion recognition APIs, and Amazon’s research on detecting emotion from the tone of users’ voices for Alexa. Innovation in the field is moving at a rapid pace.

These companies are not only investing in research but also acquiring start-ups and forming partnerships in the emotional AI space. For example, Apple’s acquisition of Emotient and Amazon’s patent filings for emotion detection technologies underscore the growing importance of emotional insight in product design and customer experience.

Market research forecasts project rapid growth for the global emotion AI market, driven by demand in industries such as healthcare, retail, automotive, and entertainment. However, the dominance of Big Tech also raises concerns about monopolization, data control, and ethical governance.

The Future of AI and Human Emotions

Looking ahead, the future of emotion AI holds both promise and uncertainty. Researchers envision a world where emotionally aware machines could act as empathetic companions, educators, and health monitors. The possibility of achieving emotional general intelligence—AI that can understand and respond to emotions in diverse, nuanced ways—could transform how we work, learn, and live.

But a critical question remains: will AI ever truly “feel”? While AI can mimic emotional responses and recognize patterns, it lacks consciousness, self-awareness, and subjective experience—the foundations of human emotion. Without these, machines may never possess genuine empathy or intuition.

Future developments will likely focus on improving context sensitivity, reducing bias, and enhancing interpretive accuracy. Emotion AI may not replicate human feelings, but it could become an invaluable tool for augmenting human emotional intelligence and fostering more compassionate technology.

Conclusion

Emotion AI is advancing quickly, bridging the gap between machine logic and human feeling. While current technologies can detect and interpret emotional cues with increasing accuracy, they remain limited by ethical concerns, cultural complexity, and the absence of true empathy.

The implications of integrating emotion AI into everyday life are vast—from improving mental health care to raising new questions about consent and manipulation. As we stand on the cusp of a new era in human-tech interaction, it’s vital to approach emotion AI with both enthusiasm and caution.

Ultimately, the success of emotion AI will depend not just on how well it understands us, but on how responsibly and ethically we choose to develop and deploy it.

FAQs

1. Can AI truly understand human emotions?

AI can detect and interpret emotional signals, but it doesn’t truly “understand” emotions as humans do. It lacks consciousness, empathy, and subjective experience.

2. What are the real-world uses of emotional AI?

Emotion AI is used in mental health apps, customer service bots, automotive safety systems, and personalized marketing to enhance user experience and decision-making.

3. Is it ethical to use AI to read emotions?

It depends on consent, transparency, and context. Without proper safeguards, emotional data collection can lead to privacy violations and emotional manipulation.

4. How accurate is AI in detecting human feelings?

Accuracy varies based on data quality, model design, and context. While AI is improving, it still struggles with nuance, sarcasm, and cultural differences.

5. Will AI ever develop emotional intelligence like humans?

AI may simulate aspects of emotional intelligence, but true emotional experience requires consciousness and self-awareness—traits that machines currently do not possess.